Monday, February 27, 2023

Species relativity of priors

It would be irrational for us to assign a very high prior probability to the thesis that spiky teal fruit is a healthy food.

If a species evolved to naturally assign a very high prior probability to the thesis that spiky teal fruit is a healthy food, it would not be irrational for them to do this.

So, what prior probabilities are rational is species relative.

Reducing exact similarity

It is a commonplace that while Platonists need to posit a primitive instantiation relation for a tomato to stand in to the universal redness, trope theorists need an exact similarity relation for the tomato’s redness to stand in to another object’s redness, and hence there is no parsimony advantage to Platonism.

This may be mistaken. For the Platonist needs a degreed or comparative similarity relation, too. It seems to be a given that maroon is more similar to burgundy than blue is to pink, and blue is more similar to pink than green is to bored. But given a degreed or comparative similarity relation, there is hope for defining exact similarity in terms of it. For we can say that x and y are exactly similar provided that it is impossible for two distinct objects to be more similar than x and y are.

That said, comparative similarity is perhaps too weird and mysterious. There are clear cases, as above, but then there are cases which are hard to make sense of. Is maroon more or less similar to burgundy than middle C is to middle B? Is green more or less similar to bored than loud is to quiet?

Friday, February 24, 2023

Do particles have a self-concept?

Of course not.

But consider this. A negatively charged substance has the power to attract other substances to itself. Its causal power thus seems to have a centeredness, a de se character. The substance’s power somehow distinguishes between the substance itself and other things.

Put this way, Leibniz's (proto?)panpsychism doesn’t seem that far a departure from a more sedate commitment to causal powers.

Thursday, February 23, 2023

Morality and the gods

In the Meno, we get a solution to the puzzle of why it is that virtue does not seem, as an empirical matter of fact, to be teachable. The solution is that instead of involving knowledge, virtue involves true belief, and true belief is not teachable in the way knowledge is.

The distinction between knowledge and true belief seems to be that knowledge is true opinion made firm by explanatory account (aitias logismoi, 98a).

This may seem to the modern philosophical reader to confuse explanation and justification. It is justification, not explanation, that is needed for knowledge. One can know that sunflowers turn to the sun without anyone knowing why or how they do so. But what Plato seems to be after here is not merely justified true belief, but something like the scientia of the Aristotelians, an explanatorily structured understanding.

But not every area seems like the case of sunflowers. There would be something very odd in a tribe knowing Fermat’s Last Theorem to be true, but without anybody in the tribe, or anybody in contact with the tribe, having anything like an explanation or proof. Mathematical knowledge of non-axiomatic claims typically involves something explanation-like: a derivation from first principles. We can, of course, rely on an expert, but eventually we must come to something proof-like.

I think ethics is in a way similar. There is something very odd about having justified true belief—knowledge in the modern sense—of ethical truths but not knowing why they are true. Yet it seems humans are often in this position. They know the ethical truths but not why they are true. Yet they have correct, and maybe even justified, moral judgments about many things. What explains this?

Socrates’ answer in the Meno is that it is the gods. The gods instill true moral opinion in people (especially the poets).

This is not a bad answer.

Saving a Newtonian intuition

Here is a Newtonian intuition:

1. Space and time themselves are unaffected by the activities of spatiotemporal beings.

General Relativity seems to upend (1). If I move my hand, that changes the geometry of spacetime in the vicinity of my hand, since gravity is explained by the geometry of spacetime and my hand has gravity.

It’s occurred to me this morning that a branching spacetime framework can restore the Newtonian intuition of the invariance of space. Suppose we think of ourselves as inhabiting a branching spacetime, with the laws of nature being such as to require all the substances to travel together (cf. the traveling forms interpretation of quantum mechanics). Then we can take this branching spacetime to have a fixed geometry, but when I move my hand, I bring it about that we all (i.e., all spatiotemporal substances now existing) move up to a branch with one geometry rather than up to a branch with a different geometry.

On this picture, the branching spacetime we inhabit is largely empty, but one lonely red line is filled with substances. Instead of us shaping spacetime, we travel in it.

I don’t know if (1) is worth saving, though.

Wednesday, February 22, 2023

From a determinable-determinate model of location to a privileged spacetime foliation

Here’s a three-level determinable-determinate model of spacetime that seems somewhat attractive to me, particularly in a multiverse context. The levels are:

1. Spatiotemporality
2. Being in a specific spacetime manifold
3. Specific location in a specific spacetime manifold.

Here, levels 2 and 3 are each a determinate of the level above it.

Thus, Alice has the property of being at spatiotemporal location x, which is a determinate of the determinable of being in manifold M, and being in manifold M is a determinate of the determinable of spatiotemporality.

This story yields a simple account of the universemate relation: objects x and y are universemates provided that they have the same Level 2 location. And spatiotemporal structure—say, lightcone and proper distance—is somehow grounded in the internal structure of the Level 2 location determinable. (The “somehow” flags that there be dragons here.)

The theory has some problematic, but very interesting, consequences. First, massive nonlocality, both in space and in time, both backwards and forwards. What spacetime manifold the past dinosaurs of Earth and the present denizens of the Andromeda Galaxy inhabit is partly up to us now. If I raise my right hand, that affects the curvature of spacetime in my vicinity, and hence affects which manifold we all have always been inhabiting.

Second, it is not possible to have a multiverse with two universes that have the same spacetime structure, say, two classical Newtonian ones, or two Minkowskian ones.

To me, the most counterintuitive of the above consequences is the backwards temporal nonlocality: that by raising my hand, I affect the level 2 locational properties, and hence the level 3 ones as well, of the dinosaurs. The dinosaurs would literally have been elsewhere had I not raised my hand!

What’s worse, we get a loop in the partial causal explanation relation. The movement of my hand affects which manifold we all live in. But which manifold we all live in affects the movement of the objects in the manifold—including that of my hand.

The only way I can think of avoiding such backwards causation on something like the above model is to shift to some model that privileges a foliation into spacelike hypersurfaces, and then has something like this structure:

1. Spatiotemporality
2. Being in a specific branching spacetime
3. Being in a specific spacelike hypersurface inside one branch
4. Specific location within the specific spacelike hypersurface.

We also need some way to handle persistence over time. Perhaps we can suppose that the fundamentally located objects are slices or slice-like accidents.

I wonder if one can separate the above line of thought from the admittedly wacky determinate-determinable model and make it into a general metaphysical argument for a privileged foliation.

Tuesday, February 21, 2023

Achievement in a quantum world

Suppose Alice gives Bob a gift of five lottery tickets, and Bob buys himself a sixth one. Bob then wins the lottery. Intuitively, if one of the tickets that Alice bought for Bob wins, then Bob’s win is Alice’s achievement, but if the winning ticket is not one of the ones that Alice bought for Bob, then Bob’s win is not Alice’s achievement.

But now suppose that there is no fact of the matter as to which ticket won, but only that Bob won. For instance, maybe the way the game works is that there is a giant roulette wheel. You hand in your tickets, and then an equal number of depressions on the wheel gets your name. If the ball ends in a depression with your name, you win. But they don’t write your name down on the depressions ticket-by-ticket. Instead, they count up how many tickets you hand them, and then write your name down on the same number of depressions.

In this case, it seems that Bob’s win isn’t Alice’s achievement, because there is no fact of the matter that it was one of Alice’s tickets that got Bob his win. Nor does this depend on the probabilities. Even if Alice gave Bob a thousand tickets, and Bob contributed only one, it seems that Bob’s win isn’t Alice’s achievement.

Yet in a world run on quantum mechanics, it seems that our agential connection to the external world is like Alice’s to Bob’s win. All we can do is tweak the probabilities, perhaps overwhelmingly so, but there is no fact of the matter about the outcome being truly ours. So it seems that nothing is ever our achievement.

That is an unacceptable consequence, I think.

I think there are two possible ways out. One is to shift our interpretation of “achievement” and say that Bob’s win is Alice’s achievement in the original case even when it was the ticket that Bob bought for himself that won. Achievement is just sufficient increase of probability followed by the occurrence of the thus probabilified event.

The second is heavy duty metaphysics. Perhaps our causal activity marks the world in such a way that there is always a trace of what happened due to what. Events come marked with their actual causal history. Sometimes, but not always, that causal history specifies what was actually the cause. Perhaps I turn a quantum probability dial from 0.01 to 0.40, and you turn it from 0.40 to 0.79, and then the event happens, and the event comes metaphysically marked with its cause. Or perhaps when I turn the quantum probability dial, I imbue it with some of my teleology, and when you turn it, you imbue it with some of yours, and there is a fact of the matter as to whether a further on down effect comes from your teleology or mine.

I find the metaphysical answer hard to believe, but I find the probabilistic one conceptually problematic.

Continuity, scoring rules and domination

Pettigrew claimed, and Nielsen and I independently proved (my proof is here) that any strictly proper scoring rule on a finite space that is continuous on the probabilities has the domination property that any non-probability is strictly dominated in score by some probability.

An interesting question is how far one can weaken the continuity assumption. While I gave necessary and sufficient conditions, those conditions are rather complicated and hard to work with. So here is an interesting question: Is it sufficient for the domination property that the scoring rule be continuous at all the regular probabilities, those that assign non-zero values to every point, and finite?

I recently posted, and fairly quickly took down, a mistaken argument for a negative answer. I now have a proof of a positive answer. It took me way too long to get that positive answer, when in fact it was just a simple geometric argument (see Lemma 1).

Slightly more generally, what’s sufficient is that the scoring rule be continuous at all the regular probabilities as well as at every point where the score is infinite.

Saturday, February 18, 2023

Two tweaks for Internet Arcade games on archive.org

javascript:MAMELoader.extraArgs=(z)=>({extra_mame_args:['-mouse','-keepaspect']});AJS.emulate()

(Note that Chrome will not let you paste the "javascript:" prefix into the URL bar. You can copy and paste the rest, but you have to type "javascript:" manually.) The game will start, and the mouse will be enabled. You can add other MAME options if you like.

You can also turn this into a bookmarklet. Indeed, you can just drag this to your bookmark bar: Emularity Mouse.

There is still a minor aspect ratio problem. If you go to fullscreen and go back to windowed view, the aspect ratio will be bad in windowed mode.

Friday, February 17, 2023

Curmudgeonly griping

One of the standard gripes about modern manufacturing is how many items break down because the manufacturer saved a very small fraction of the price, sometimes only a few cents. I find myself frequently resoldering mice and headphones, presumably because the wires were too thin, but there at least there is a functionality benefit from thin wires.

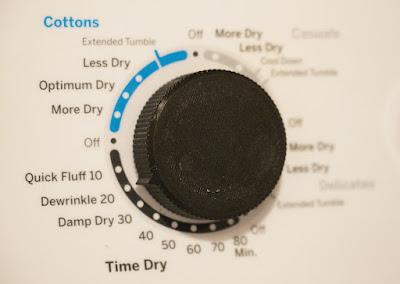

The most recent is our GE dryer where the timer knob always felt flimsy, and finally the plastic holding the timer shaft cracked. The underside revealed thin-walled plastic holding the timer shaft, reinforced with some more chambers made of thin-walled plastic. Perhaps over-reacting, I ended up designing a very chunky 3D-printed one.

I suppose there is an environmental benefit from using less plastic, but it needs to be offset against the environmental cost of repair and replacement. Adding ten grams of plastic would have easily made the knob way, way stronger, and that's about 0.01 gallons of crude oil, which is probably an order of magnitude less than the crude oil someone might use to drive to the store for a replacement (or a repair person being called in; in our case, it wasn't obvious that the knob was the problem; I suspected the timer at first, and disassembled it, before finally realizing that the knob had an internal crack that made it impossible for it to exert the needed torque).

Monday, February 13, 2023

Strictly proper scoring rules in an infinite context

This is mostly just a note to self.

In a recent paper, I prove that there is no strictly proper score $s$ on the countably additive probabilities on the product space $2^\kappa$, where we require $s(p)$ to be measurable for every probability $p$ and where $\kappa$ is a cardinal such that $2^\kappa > \kappa^\omega$ (e.g., the continuum). But here I am taking strictly proper scores to be real- or extended-real valued. What if we relax this requirement? The answer is negative.

Theorem: Assume Choice. Suppose $2^\kappa > \kappa^\omega$, $V$ is a topological space with the $T_1$ property, and $\le$ is a total pre-order on $V$. Let $s$ be a function from the extreme probabilities on $\Omega = 2^\kappa$ (with the product σ-algebra) concentrated on points to the set of measurable functions from $\Omega$ to $V$. Then there exist extreme probabilities $r$ and $q$ concentrated at distinct points $\alpha$ and $\beta$, respectively, such that $s(r)(\alpha) \le s(q)(\alpha)$.

A space is $T_1$ iff singletons are closed. Extreme probabilities only have 0 and 1 values. An extreme probability $p$ is concentrated at $\alpha$ provided that $p(A) = 1$ if $\alpha \in A$ and $p(A) = 0$ otherwise. (We can identify extreme probabilities with the points at which they are concentrated, as long as the σ-algebra separates points.) Note that in the product σ-algebra on $2^\kappa$ singletons are not measurable.

Corollary: In the setting of the Theorem, if $E_p$ is a $V$-valued prevision from $V$-valued measurable functions to $V$ such that (a) $E_p(c \cdot 1_A) = c\,p(A)$ always, (b) if $f$ and $g$ are equal outside of a set with $p$-measure zero, then $E_p f = E_p g$, and (c) $E_p s(q)$ is always defined for extreme singleton-concentrated $p$ and $q$, then the strict propriety inequality $E_r s(r) > E_r s(q)$ fails for some distinct extreme singleton-concentrated $r$ and $q$.

Proof of Corollary: Suppose $p$ is concentrated at $\alpha$ and $E_p f$ is defined. Let $Z = \{\omega : f(\omega) = f(\alpha)\}$. Then $p(Z) = 1$ and $p(Z^c) = 0$. Hence $f$ is $p$-almost surely equal to $f(\alpha) \cdot 1_Z$. Thus, $E_p f = f(\alpha)$. Thus if $r$ and $q$ are as in the Theorem, $E_r s(r) = s(r)(\alpha)$ and $E_r s(q) = s(q)(\alpha)$, and our result follows from the Theorem.

Note: Towards the end of my paper, I suggested that the unavailability of proper scores in certain contexts is due to the limited size of the set of values—normally assumed to be real. But the above shows that sometimes it’s due to measurability constraints instead, as we still won’t have proper scores even if we let the values be some gigantic collection of surreals.

Proof of Theorem: Given an extreme probability $p$ and $z \in V$, the inverse image of $z$ under $s(p)$, namely $L_{p,z} = \{\omega : s(p)(\omega) = z\}$, is measurable. A measurable set $A$ depends only on a countable number of factors of $2^\kappa$: i.e., there is a countable set $C \subseteq \kappa$ such that if $\omega, \omega' \in 2^\kappa$ agree on $C$, and one is in $A$, so is the other. Let $C_p \subset \kappa$ be a countable set such that $L_{p,s(p)(\omega)}$ depends only on $C_p$, where $\omega$ is the point $p$ is concentrated on (to get all the $C_p$ we need the Axiom of Choice). The set of all the countable subsets of $\kappa$ has cardinality at most $\kappa^\omega$, and hence so does the set of all the $C_p$. Let $Z_p = \{q : C_q = C_p\}$. The union of the $Z_p$ is all the extreme point-concentrated probabilities and hence has cardinality $2^\kappa$. There are at most $\kappa^\omega$ different $Z_p$. Thus for some $p$, the set $Z_p$ has cardinality bigger than $\kappa^\omega$ (here we used the assumption that $2^\kappa > \kappa^\omega$).

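There are distinct $q$ and $r$ in $Z_p$ concentrated at $\alpha$ and $\beta$, respectively, such that $\alpha|_{C_p} = \beta|_{C_p}$ (i.e., $\alpha$ and $\beta$ agree on $C_p$). For the function $(\cdot)|_{C_p}$ on the concentration points of the probabilities in $Z_p$ has an image of cardinality at most $2^\omega \le \kappa^\omega$ but $Z_p$ has cardinality bigger than $\kappa^\omega$. Since $\alpha|_{C_p} = \beta|_{C_p}$, and $L_{q,s(q)(\alpha)}$ and $L_{r,s(r)(\beta)}$ both depend only on the factors in $C_p$, it follows that $s(q)(\beta) = s(q)(\alpha)$ and $s(r)(\alpha) = s(r)(\beta)$. Now either $s(q)(\beta) \ge s(r)(\alpha)$ or $s(q)(\beta) \le s(r)(\alpha)$ by totality. Swapping $(q,\alpha)$ with $(r,\beta)$ if necessary, assume $s(q)(\alpha) = s(q)(\beta) \le s(r)(\alpha)$. This is the conclusion of the Theorem with the names $q$ and $r$ interchanged.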

Fundamentality and anthropocentrism

Say an object is grue if it is observed before the year 2100 and green, or it is blue but not observed before 2100. Then it is reasonable to do induction with “green” but not with “grue”: our observations of emerald color fit equally well with the hypotheses that emeralds are green and that they are grue, but it is the green hypothesis that is reasonable.
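The definition can be put as a small Python sketch. The `Emerald` record, the sample observation years and the helper names are illustrative assumptions, not part of the argument; the point is only that every observation made before 2100 fits "green" and "grue" equally well, while the two predicates come apart afterwards.

```python
from dataclasses import dataclass
from typing import Optional

CUTOFF_YEAR = 2100  # the cutoff year used in the definition of "grue"


@dataclass(frozen=True)
class Emerald:
    color: str                    # "green" or "blue"
    observed_year: Optional[int]  # year of first observation, None if never observed


def observed_before_cutoff(e: Emerald) -> bool:
    return e.observed_year is not None and e.observed_year < CUTOFF_YEAR


def is_green(e: Emerald) -> bool:
    return e.color == "green"


def is_grue(e: Emerald) -> bool:
    # grue: observed before 2100 and green, or blue but not observed before 2100
    if observed_before_cutoff(e):
        return e.color == "green"
    return e.color == "blue"


# Hypothetical observations so far: all green, all made before the cutoff.
observed_so_far = [Emerald("green", year) for year in (1900, 1985, 2023)]
fits_green = all(is_green(e) for e in observed_so_far)
fits_grue = all(is_grue(e) for e in observed_so_far)

# The two hypotheses diverge only for emeralds not observed before the cutoff.
late_green = Emerald("green", 2150)
unobserved_blue = Emerald("blue", None)
```

Both `fits_green` and `fits_grue` come out true on this data, so the data alone cannot favor one hypothesis over the other.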

A plausible story about the relevant difference between “green” and “grue” is that “green” is significantly closer to being a “perfectly natural” or “fundamental” property than “grue” is. If we try to define “green” and “grue” in fundamental scientific vocabulary, the definition of “grue” will be about twice as long. Thus, “green” is projectible but “grue” is not, to use Goodman’s term.

But this story has an interesting problem. Say that an object is pogatively charged if it is observed before Planck time $2^n$ and positively charged, or it is negatively charged but not observed before Planck time $2^n$. By the “Planck time”, I mean the proper time from the beginning of the universe measured in Planck times, and I stipulate that $n$ is the smallest integer such that $2^n$ is in our future. Now, while “pogatively charged” is further from the fundamental than “positively charged”, nonetheless “pogatively charged” seems much more fundamental than “green”. Just think how hard it is to define “green” in fundamental terms: objects are green provided that their emissive/refractive/reflective spectral profile peaks in a particular way in a particular part of the visible spectrum. Defining the “particular way” and “particular part” will be complex (it will make reference to details tied to our visual sensitivities), and defining “emissive/refractive/reflective”, and handling the complex interplay of these, is tough.

One move would be to draw a strong anti-reductionist conclusion from this: “green” is not to be defined in terms of spectral profiles, but is about as close to fundamentality as “positively charged”.

Another move would be to say that projectibility is not about distance to fundamentality, but is legitimately anthropocentric. I think this kind of anthropocentrism is only plausible on the hypothesis that the world is made for us humans.

Monday, February 6, 2023

Eliminating contingent relations

Here’s a Pythagorean way to eliminate contingent relations from a theory. Let’s say that we want to eliminate the relation of love from a theory of persons. We suppose instead that each person has two fundamental contingent numerical determinables: personal number and love number, both of which are positive integers, with each individual’s personal number being prime and an essential property of theirs. Then we say that x loves y iff x’s love number is divisible by y’s personal number. For instance suppose we have three people: Alice, Bob and Carl, and Alice loves herself and Carl, Bob loves himself and Alice, and Carl loves no one. This can be made true by the following setup:

| | Alice | Bob | Carl |
|---|---|---|---|
| Personal number | 2 | 3 | 5 |
| Love number | 10 | 6 | 1 |
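The setup in the table can be checked mechanically. Here is a minimal Python sketch; the dictionary and function names are my own illustrative choices, and `encode` assumes love-finitism, since it multiplies finitely many primes.

```python
from math import prod

# Personal numbers (distinct primes) and love numbers from the table.
personal_number = {"Alice": 2, "Bob": 3, "Carl": 5}
love_number = {"Alice": 10, "Bob": 6, "Carl": 1}


def loves(x, y):
    """x loves y iff x's love number is divisible by y's personal number."""
    return love_number[x] % personal_number[y] == 0


def beloved_of(x):
    """The set of people x loves, read off from the two determinables."""
    return {y for y in personal_number if loves(x, y)}


def encode(loved):
    """Love number for a finite set of loved people: the product of their primes."""
    return prod(personal_number[y] for y in loved)
```

For instance, `beloved_of("Alice")` recovers Alice and Carl, and `encode(set())` is 1, matching Carl, who loves no one.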

While of course my illustration of this in terms of love is unserious, and only really works assuming love-finitism (each person can only love finitely many people), the point generalizes: we can replace non-mathematical relations with mathematizable determinables and mathematical relations. For instance, spatial relations can be analyzed by supposing that objects have a location determinable whose possible values are regions of a mathematical manifold.

This requires two kinds of non-contingent relations: mathematical relations between the values and the determinable–determinate relation. One may worry that these are just as puzzling as the contingent ones. I don't know. I've always found contingent relations really puzzling.

Thursday, February 2, 2023

Rethinking priors

Suppose I learned that all my original priors were consistent and regular but produced by an evil demon bent upon misleading me.

The subjective Bayesian answer is that since consistent and regular original priors are not subject to rational evaluation, I do not need to engage in any radical uprooting of my thinking. All I need to do is update on this new and interesting fact about my origins. I would probably become more sceptical, but all within the confines of my original priors, which presumably include such things as the conditional probability that I have a body given that I seem to have a body but there is an evil demon bent upon misleading me.

This answer seems wrong. So much the worse for subjective Bayesianism. A radical uprooting would be needed. It would be time to sit back, put aside preconceptions, and engage in some fallibilist version of the Cartesian project of radical rethinking. That project might be doomed, but it would be my only hope.

Now, what if instead of the evil demon, I learned of a random process independent of truth as the ultimate origin of my priors? I think the same thing would be true. It would be a time to be brave and uproot it all.

I think something similar is true piece by piece, too. I have a strong moral intuition that consequentialism is false. But suppose that I learned that when I was a baby, a mad scientist captured me and flipped a coin with the plan that on heads a high prior in anti-consequentialism would be induced and on tails it would be a high prior in consequentialism instead. I would have to rethink consequentialism. I couldn’t just stick with the priors.

Socrates and thinking for yourself

There is a popular picture of Socrates as someone inviting us to think for ourselves. I was just re-reading the Euthyphro, and realizing that the popular picture is severely incomplete.

Recall the setting. Euthyphro is prosecuting a murder case against his father. The case is fraught with complexity, and it is one that a typical Greek would think should not be brought for multiple reasons, the main one being that the accused is the prosecutor’s father and we have very strong duties towards parents, and a secondary one being that the killing was unintentional and by neglect. Socrates then says:

most men would not know how they could do this and be right. It is not the part of anyone to do this, but of one who is far advanced in wisdom. (4b)

We learn in the rest of the dialogue that Euthyphro is pompous, full of himself, needs simple distinctions to be explained, and, to understate the point, is far from “advanced in wisdom”. And he thinks for himself, doing that which the ordinary Greek thinks to be a quite bad idea.

The message we get seems to be that you should abide by cultural norms, unless you are “far advanced in wisdom”. And when we add the critiques of cultural elites and ordinary competent craftsmen from the Apology, we see that almost no one is “advanced in wisdom”. The consequence is that we should not depart significantly from cultural norms.

This reading fits well with the general message we get about the poets: they don’t know how to live well, but they have some kind of a connection with the gods, so presumably we should live by their message. Perhaps there is an exception for those sufficiently wise to figure things out for themselves, but those are extremely rare, while those who think themselves wise are extremely common. There is a great risk in significantly departing from the cultural norms enshrined in the poets—for one is much more likely to be one of those who think themselves wise than one of those who are genuinely wise.

I am not endorsing this kind of complacency. For one, those of us who are religious have two rich sets of cultural norms to draw on, a secular set and a religious one, and in our present Western setting the two tend to have sufficient disagreement that complacency is not possible—one must make a choice in many cases. And then there is grace.

From strict anti-anti-Bayesianism to strict propriety

In my previous post, I showed that a continuous anti-anti-Bayesian accuracy scoring rule on probabilities defined on a sub-algebra of events satisfying the technical assumption that the full algebra contains an event logically independent of the sub-algebra is proper. However, I couldn’t figure out how to prove strict propriety given strict anti-anti-Bayesianism. I still can’t, but I can get closer.

First, a definition. A scoring rule on probabilities on the sub-algebra $H$ is strictly anti-anti-Bayesian provided that one expects it to penalize non-trivial binary anti-Bayesian updates. I.e., if $A$ is an event with prior probability $p$ neither zero nor one, and Bayesian conditionalization on $A$ (or, equivalently, on $A^c$) modifies the probability of some member of $H$, then the $p$-expected score of finding out whether $A$ or $A^c$ holds and conditionalizing on that is strictly better than if one adopted the procedure of conditionalizing on the complement of the actually obtaining event.

Suppose we have continuity, the technical assumption and anti-anti-Bayesianism. My previous post shows that the scoring rule is proper. I can now show that it is strictly proper if we strengthen anti-anti-Bayesianism to strict anti-anti-Bayesianism and add the technical assumption that the scoring rule satisfies the finiteness condition that $E_p s(p)$ is finite for any probability $p$ on $H$. Since we’re working with accuracy scoring rules and these take values in $[-\infty, M]$ for finite $M$, the only way to violate the finiteness condition is to have $E_p s(p) = -\infty$, which would mean that $s$ is very pessimistic about $p$: by $p$’s own lights, the expected score of $p$ is infinitely bad. The finiteness condition thus rules out such maximal pessimism.

Here is a sketch of the proof. Suppose we do not have strict propriety. Then there will be two distinct probabilities $p$ and $q$ such that $E_p s(p) \le E_p s(q)$. By propriety, the inequality must be an equality. By Proposition 9 of a recent paper of mine, it follows that $s(p) = s(q)$ everywhere (this is where the finiteness condition is used). Now let $r = (p+q)/2$. Using the trick from the Appendix here, we can find a probability $p'$ on the full algebra and an event $Z$ such that $r$ is the restriction of $p'$ to $H$, $p$ is the restriction of the Bayesian conditionalization of $p'$ on $Z$ to $H$, and $q$ is the restriction of the Bayesian conditionalization of $p'$ on $Z^c$ to $H$. Then the scores of $p$ and $q$ will be the same, and hence the scores of Bayesian and anti-Bayesian conditionalization on finding out whether $Z$ or $Z^c$ is actual are guaranteed to be the same, and this violates strict anti-anti-Bayesianism.

One might hope that this will help those who are trying to construct accuracy arguments for probabilism—the doctrine that credences should be probabilities. The hitch in those arguments is establishing strict propriety. However, I doubt that what I have helps. First, I am working in a sub-algebra setting. Second, and more importantly, I am working in a context where scoring rules are defined only for probabilities, and so the strict propriety inequality I get is only for scores of pairs of probabilities, while the accuracy arguments require strict propriety for pairs of credences exactly one of which is not a probability.

Wednesday, February 1, 2023

From anti-anti-Bayesianism to propriety

Let’s work in the setting of my previous post, including technical assumption (3), and also assume Ω is finite and that our scoring rules are all continuous.

Say that an anti-Bayesian update is when you take a probability $p$, receive evidence $A$, and make your new credence be $p(\cdot|A^c)$, i.e., you conditionalize on the complement of the evidence. Anti-Bayesian update is really stupid, and you shouldn’t get rewarded for it, even if all you care about are events other than $A$ and $A^c$.

Say that an $H$-scoring rule $s$ is anti-anti-Bayesian provided that the expected score of a policy of anti-Bayesian update on an event $A$ whose prior probability is neither zero nor one is never better than the expected score of a policy of Bayesian update.
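To make the two policies concrete, here is a minimal Python sketch that compares their expected scores. It rests on simplifying assumptions that are mine rather than part of the setting: a four-point space, the sub-algebra taken to be the full powerset, and the Brier score (negated, so that higher is better) as the accuracy scoring rule. The prior and the event are arbitrary illustrative values.

```python
def brier(forecast, outcome):
    """Brier accuracy score (higher is better) of a point-by-point forecast."""
    return -sum((f - (1.0 if i == outcome else 0.0)) ** 2
                for i, f in enumerate(forecast))


def conditionalize(p, event):
    """Bayesian conditionalization of p on a set of points of non-zero probability."""
    total = sum(p[i] for i in event)
    return [p[i] / total if i in event else 0.0 for i in range(len(p))]


def policy_scores(p, event):
    """Expected scores of the Bayesian and the anti-Bayesian update policies."""
    omega = set(range(len(p)))
    complement = omega - event
    bayes = anti = 0.0
    for w in omega:
        learned = event if w in event else complement  # the evidence actually received
        other = complement if w in event else event    # its complement
        bayes += p[w] * brier(conditionalize(p, learned), w)
        anti += p[w] * brier(conditionalize(p, other), w)
    return bayes, anti


prior = [0.1, 0.2, 0.3, 0.4]  # illustrative prior on four points
A = {0, 1}                    # illustrative evidence event, prior probability 0.3
bayes_score, anti_score = policy_scores(prior, A)
```

In this example the Bayesian policy has the higher expected score, which is what anti-anti-Bayesianism requires of a scoring rule.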

I claim that given continuity, anti-anti-Bayesianism implies that the scoring rule is proper.

First, note that by continuity, if it’s proper at all the regular probabilities (ones that do not assign 0 or 1 to any non-empty set) on H, then it’s proper (I am assuming we handle infinities like in this paper, and use Lemma 1 there).

So all we need to do is show that it’s proper at all the regular probabilities on $H$. Let $p$ be a regular probability, and contrary to propriety suppose that $E_p s(p) < E_p s(q)$ for another probability $q$. For $t \ge 0$, let $p_t$ be such that $tq + (1-t)p_t = p$, i.e., let $p_t = (p - tq)/(1-t)$. Since $p$ is regular, for $t$ sufficiently small, $p_t$ will be a probability (all we need is that it be non-negative). Using the trick from the Appendix of the previous post with $q$ in place of $p_1$ and $p_t$ in place of $p_2$, we can set up a situation where the Bayesian update will have expected score:

$$t E_q s(q) + (1-t) E_{p_t} s(p_t)$$

and the anti-Bayesian update will have the expected score:

$$t E_q s(p_t) + (1-t) E_{p_t} s(q).$$

Given anti-anti-Bayesianism, we must have

$$t E_q s(p_t) + (1-t) E_{p_t} s(q) \le t E_q s(q) + (1-t) E_{p_t} s(p_t).$$

Letting $t \to 0$ and using continuity, we get:

$$E_{p_0} s(q) \le E_{p_0} s(p_0).$$

But $p_0 = p$. So we have propriety.

Open-mindedness and propriety

Suppose we have a probability space Ω with algebra F of events, and a distinguished subalgebra H of events on Ω. My interest here is in accuracy H-scoring rules, which take a (finitely-additive) probability assignment p on H and assigns to it an H-measurable score function s(p) on Ω, with values in [−∞,M] for some finite M, subject to the constraint that s(p) is H-measurable. I will take the score of a probability assignment to represent the epistemic utility or accuracy of p.

For a probability p on F, I will take the score of p to be the score of the restriction of p to H. (Note that any finitely-additive probability on H extends to a finitely-additive probability on F by Hahn-Banach theorem, assuming Choice.)

The scoring rule s is proper provided that E_p s(q) ≤ E_p s(p) for all p and q, and strictly so if the inequality is strict whenever p ≠ q. Propriety says that one never expects a different probability from one’s own to have a better score (if one did, wouldn’t one have switched to it?).

Say that the scoring rule s is open-minded provided that for any probability p on F and any finite partition V of Ω into events in F with non-zero p-probability, the p-expected score of finding out where in V we are and conditionalizing on that is at least as big as the current p-expected score. If the scoring rule is open-minded, then a Bayesian conditionalizer is never precluded from accepting free information. Say that the scoring rule s is strictly open-minded provided that the p-expected score of finding out where in V we are and conditionalizing is strictly bigger than the current p-expected score whenever there is at least one event E in V such that p(⋅|E) differs from p on H and p(E) > 0.

Given a scoring rule s, let the expected score function Gs on the probabilities on H be defined by Gs(p) = E_p s(p), with the same extension to probabilities on F as scores had.

It is well-known that:

- (1) The (strict) propriety of s entails the (strict) convexity of Gs.

It is easy to see that:

- (2) The (strict) convexity of Gs implies the (strict) open-mindedness of s.
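The step from convexity to open-mindedness can be spelled out as follows. This is a sketch using only the definitions above, writing p_A for p conditionalized on A. On H, p is the mixture of the p_A with weights p(A), so convexity gives:

```latex
p|_H = \sum_{A \in V} p(A)\, p_A|_H
\quad\Longrightarrow\quad
G_s(p) \;\le\; \sum_{A \in V} p(A)\, G_s(p_A)
       \;=\; \sum_{A \in V} p(A)\, E_{p_A} s(p_A)
       \;=\; E_p\Big[\sum_{A \in V} 1_A \, s(p_A)\Big].
```

The right-hand side is the p-expected score of finding out where in V we are and conditionalizing. If some p_A differs from p on H, the p_A are not all equal on H, and strict convexity makes the inequality strict.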

Neither implication can be reversed. To see this, consider the single-proposition case, where Ω has two points, say 0 and 1, and H and F are the powerset of Ω, and we are interested in the proposition that one of these points, say 1, is the actual truth. The scoring rule s is then equivalent to a pair of functions T and F on [0,1] (in this example, F denotes this function rather than the algebra) where T(x) = s(p_x)(1) and F(x) = s(p_x)(0) where p_x is the probability that assigns x to the point 1. Then Gs corresponds to the function xT(x) + (1−x)F(x), and each is convex if and only if the other is.

To see that the non-strict version of (1) cannot be reversed, suppose (T,F) is a non-trivial proper scoring rule with the limit of F(x)/x as x goes to 0 finite. Now form a new scoring rule by letting T*(x) = T(x) + (1−x)F(x)/x. Consider the scoring rule (T*,0). The corresponding function xT*(x) is going to be convex, but (T*,0) isn’t going to be proper unless T* is constant, which isn’t going to be true in general. The strict version is similar.
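For a concrete instance of this construction, take (T,F) to be the quadratic rule for the single proposition. This choice is an illustrative assumption, not something the argument depends on:

```latex
T(x) = -(1-x)^2, \qquad F(x) = -x^2, \qquad \lim_{x \to 0} F(x)/x = \lim_{x \to 0} (-x) = 0,
\\[4pt]
T^*(x) = -(1-x)^2 + (1-x)\,\frac{-x^2}{x} = -(1-x)\big[(1-x) + x\big] = x - 1,
\\[4pt]
x\,T^*(x) = x^2 - x = x\,T(x) + (1-x)\,F(x).
```

So the expected score function of (T*,0) is the convex function x² − x, the same as for (T,F). But with credence x > 0, the expected score of reporting y under (T*,0) is x(y − 1), which is maximized at y = 1 rather than at y = x, so (T*,0) is not proper.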

To see that (2) cannot be reversed, note that the only non-trivial partition is {{0}, {1}}. If our current probability for 1 is x, the expected score upon learning where we are is xT(1) + (1−x)F(0). Strict open-mindedness thus requires precisely that xT(x) + (1−x)F(x) < xT(1) + (1−x)F(0) whenever x is neither 0 nor 1. It is clear that this is not enough for convexity: we can have wild oscillations of T and F on (0,1) as long as T(1) and F(0) are large enough.
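Here is a minimal sketch of such an oscillating rule. The specific T and F (a sine wave on the interior, with the value 2 at the relevant endpoint) are hypothetical choices made only for illustration.

```python
import math

def T(x):
    # Score if the proposition is true; large at x = 1, oscillating elsewhere.
    return 2.0 if x == 1.0 else math.sin(20 * x)

def F(x):
    # Score if the proposition is false; large at x = 0, oscillating elsewhere.
    return 2.0 if x == 0.0 else math.sin(20 * x)

def G(x):
    # Expected score of holding credence x, by the lights of credence x.
    return x * T(x) + (1 - x) * F(x)

def learn(x):
    # Expected score of finding out the truth and conditionalizing.
    return x * T(1.0) + (1 - x) * F(0.0)

# Strict open-mindedness on a grid of interior credences.
grid = [i / 1000 for i in range(1, 1000)]
strictly_open_minded = all(G(x) < learn(x) for x in grid)

# Midpoint convexity fails around a peak of the sine wave.
a, b = math.pi / 40 - 0.05, math.pi / 40 + 0.05
mid = (a + b) / 2
convexity_violated = G(mid) > 0.5 * G(a) + 0.5 * G(b)

print(strictly_open_minded, convexity_violated)
```

On the interior, G(x) is just sin(20x), which never exceeds 1, while learning the truth always has expected score 2. So the strict open-mindedness inequality holds even though G is far from convex.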

Nonetheless, (2) can be reversed (both in the strict and non-strict versions) on the following technical assumption:

- (3) There is an event Z in F such that Z ∩ A is a non-empty proper subset of A for every non-empty member of H.

This technical assumption basically says that there is a non-trivial event that is logically independent of everything in H. In real life, the technical assumption is always satisfied, because there will always be something independent of the algebra H of events we are evaluating probability assignments to (e.g., in many cases Z can be the event that the next coin toss by the investigator’s niece will be heads). I will prove that (2) can be reversed in the Appendix.

It is easy to see that adding (3) to our assumptions doesn’t help reverse (1).

Since open-mindedness is pretty plausible to people of a Bayesian persuasion, this means that convexity of Gs can be motivated independently of propriety. Perhaps instead of focusing on propriety of s as much as the literature has done, we should focus on the convexity of Gs?

Let’s think about this suggestion. One of the most important uses of scoring rules could be to evaluate the expected value of an experiment prior to doing the experiment, and hence decide which experiment we should do. If we think of an experiment as a finite partition V of the probability space with each cell having non-zero probability by one’s current lights p, then the expected value of the experiment is:

- ∑_{A ∈ V} p(A) E_{p_A} s(p_A) = ∑_{A ∈ V} p(A) Gs(p_A),

where p_A is the result of conditionalizing p on A. In other words, to evaluate the expected values of experiments, all we care about is Gs, not s itself, and so the convexity of Gs is a very natural condition: we are never obligated to refuse to know the results of free experiments.
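The formula for the expected value of an experiment can be sketched in a few lines. This assumes a finite Ω with H and F both the powerset, and uses the Brier expected score function as an illustrative Gs. The function names and the example numbers are hypothetical.

```python
def conditionalize(p, cell):
    """Return (p conditionalized on cell, p(cell)); p maps points to masses."""
    mass = sum(p[w] for w in cell)
    return {w: (p[w] / mass if w in cell else 0.0) for w in p}, mass

def G_brier(p):
    # Illustrative choice (assumption): for the Brier score,
    # E_p s(p) works out to sum_w p(w)^2 - 1.
    return sum(v * v for v in p.values()) - 1.0

def experiment_value(G, p, partition):
    """Sum over cells A of p(A) * G(p_A); cells must have non-zero probability."""
    total = 0.0
    for cell in partition:
        p_cell, mass = conditionalize(p, cell)
        total += mass * G(p_cell)
    return total

p = {"a": 0.1, "b": 0.2, "c": 0.3, "d": 0.4}
no_experiment = [{"a", "b", "c", "d"}]
coarse = [{"a", "b"}, {"c", "d"}]
full = [{"a"}, {"b"}, {"c"}, {"d"}]

for name, V in (("none", no_experiment), ("coarse", coarse), ("full", full)):
    print(name, experiment_value(G_brier, p, V))
```

Only G enters the computation, never the underlying score s. Because this G is convex, the trivial experiment is worth exactly G(p), and each of the two non-trivial experiments here is worth at least that.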

However, at least in the case where Ω is finite, it is known that any (strictly) convex function (maybe subject to some growth conditions?) is equal to Gu for some (strictly) proper scoring rule u. So we don’t really gain much generality by moving from propriety of s to convexity of Gs. Indeed, the above observations show that for finite Ω, a (strictly) open-minded way of evaluating the expected epistemic values of experiments in a setting rich enough to satisfy (3) is always generatable by a (strictly) proper scoring rule.

In other words, if we have a scoring rule that is open-minded but not proper, we can find a proper scoring rule that generates the same prospective evaluations of the value of experiments (assuming no special growth conditions are needed).

Appendix: We now prove the converse of (2) assuming (3).

Assume open-mindedness. Let p1 and p2 be two distinct probabilities on H and let t ∈ (0,1). We must show that if p = tp1 + (1−t)p2, then

- Gs(p) ≤ tGs(p1) + (1−t)Gs(p2)

with the inequality strict if the open-mindedness is strict. Let Z be as in (3). Define

p′(A∩Z) = tp1(A)

p′(A∩Z^c) = (1−t)p2(A)

p′(A) = p(A)

for any A ∈ H. Then p′ is a probability on the algebra generated by H and Z extending p. Extend it to a probability on F by Hahn-Banach. By open-mindedness:

- Gs(p′) ≤ p′(Z) E_{p′_Z} s(p′_Z) + p′(Z^c) E_{p′_{Z^c}} s(p′_{Z^c}).

But p′(Z) = p′(Ω∩Z) = tp1(Ω) = t and p′(Z^c) = 1 − t. Moreover, p′_Z = p1 on H and p′_{Z^c} = p2 on H. Since H-scores don’t care what the probabilities are doing outside of H, we have s(p′_Z) = s(p1) and s(p′_{Z^c}) = s(p2) and Gs(p′) = Gs(p). Moreover our scores are H-measurable, so E_{p′_Z} s(p1) = E_{p1} s(p1) and E_{p′_{Z^c}} s(p2) = E_{p2} s(p2). Thus the open-mindedness inequality above becomes:

- Gs(p) ≤ tGs(p1) + (1−t)Gs(p2).

Hence we have convexity. And given strict open-mindedness, the inequality will be strict, and we get strict convexity.
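The construction of p′ can be checked numerically in a toy model. This is a minimal sketch assuming H has finitely many atoms and modelling Z as an extra binary coordinate, so that every non-empty member of H meets both Z and its complement. The helper names and the numbers are hypothetical.

```python
def build_p_prime(p1, p2, t):
    # Points are pairs (atom of H, in_Z). Mass t*p1 goes on Z, (1-t)*p2 off Z.
    return {(a, z): (t * p1[a] if z else (1 - t) * p2[a])
            for a in p1 for z in (True, False)}

def marginal_on_H(pp):
    """Restrict p' to H by summing out the Z coordinate."""
    out = {}
    for (a, _z), m in pp.items():
        out[a] = out.get(a, 0.0) + m
    return out

def conditional_on(pp, z):
    """Return (p' conditionalized on Z or its complement, restricted to H; mass)."""
    mass = sum(m for (_a, zz), m in pp.items() if zz == z)
    return {a: m / mass for (a, zz), m in pp.items() if zz == z}, mass

p1 = {"x": 0.2, "y": 0.8}
p2 = {"x": 0.7, "y": 0.3}
t = 0.25

pp = build_p_prime(p1, p2, t)
p_on_H = marginal_on_H(pp)            # should equal t*p1 + (1-t)*p2
on_Z, mass_Z = conditional_on(pp, True)
off_Z, mass_Zc = conditional_on(pp, False)
print(p_on_H, mass_Z, on_Z, mass_Zc, off_Z)
```

In this model, learning whether Z holds is an experiment that moves a Bayesian with credence p on H to p1 with probability t and to p2 with probability 1 − t. This is exactly what the proof needs in order to turn open-mindedness into the convexity inequality.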